NVIDIA Unveils Blackwell Ultra GPU: AI Reasoning Breakthrough at 1,500W

NVIDIA Redefines AI Infrastructure With Blackwell Ultra Superchips

NVIDIA's newly unveiled Blackwell Ultra GB300 GPU represents the most significant leap in AI reasoning hardware since the 2022 Hopper architecture. Announced at Computex 2025 and already adopted by Dell, Foxconn, and TSMC, this 1,500W behemoth delivers 1,000 tokens per second, 10x faster than the previous generation, while consuming 35% less power per token (NVIDIA News).
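A back-of-envelope reading of these headline numbers shows what they imply. A 10x gain that reaches 1,000 tokens per second means a previous-generation baseline of about 100 tokens per second, and a 35% per-token cut leaves 0.65 of the prior energy per token. The Python sketch below takes the quoted figures as given rather than measured, reads "power per token" as energy per token, and uses a hypothetical 10,000-token reasoning trace chosen only for illustration.

```python
# Back-of-envelope check of the headline figures quoted above.
# Inputs are the article's claims, not measured values.
ULTRA_TOKENS_PER_S = 1_000   # claimed Blackwell Ultra throughput
SPEEDUP = 10                 # claimed gain over the previous generation
ENERGY_REDUCTION = 0.35      # "35% less power per token", read as energy per token


def implied_baseline_tps(tps: float, speedup: float) -> float:
    """Throughput the previous generation must have had for the speedup claim to hold."""
    return tps / speedup


def relative_energy_per_token(reduction: float) -> float:
    """Energy per token relative to the previous generation (1.0 = unchanged)."""
    return 1.0 - reduction


def generation_time_s(num_tokens: int, tps: float) -> float:
    """Seconds needed to emit num_tokens at a steady rate of tps tokens per second."""
    return num_tokens / tps


baseline = implied_baseline_tps(ULTRA_TOKENS_PER_S, SPEEDUP)
print(f"implied previous-gen throughput: {baseline:.0f} tokens/s")
print(f"relative energy per token: {relative_energy_per_token(ENERGY_REDUCTION):.2f}")

# Hypothetical 10,000-token reasoning trace at each rate.
for label, tps in (("previous gen", baseline), ("Blackwell Ultra", ULTRA_TOKENS_PER_S)):
    print(f"{label}: {generation_time_s(10_000, tps):.0f} s")
```

The printed times (100 s versus 10 s for the hypothetical trace) simply restate the claimed 10x ratio; real-world speedups depend on the model, batch size, and numeric precision.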

Technical Advancements

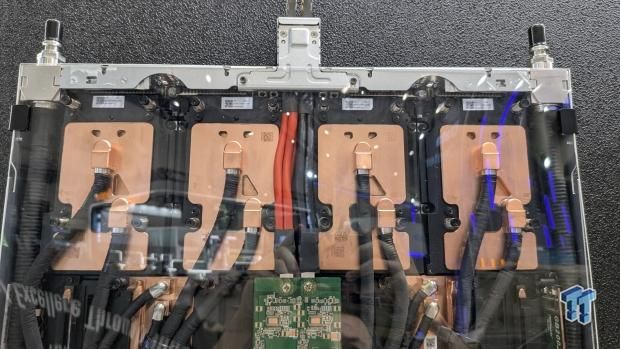

The Blackwell Ultra GB300 NVL72 system combines 72 Blackwell Ultra GPUs and 36 Grace CPUs in a single liquid-cooled rack, achieving 1.5 exaflops of FP4 performance through:

- Fifth-generation Tensor Cores with accelerated attention-layer processing

- 288GB of HBM3e memory per GPU, sized for trillion-parameter models

- Fifth-generation NVLink providing 1.8TB/s of GPU-to-GPU bandwidth per GPU (Neowin)
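A quick sanity check of the rack-level figures can be sketched in Python. It assumes the 1.5 exaflops FP4 figure is the aggregate for all 72 GPUs and that the 288GB of HBM3e is per GPU, and it counts model weights only (no KV cache or activations). The helper names are hypothetical. Under those assumptions each GPU contributes roughly 20.8 petaflops of FP4 and the rack holds about 20.7TB of HBM. A trillion-parameter model's FP4 weights (about 0.5TB) exceed a single GPU's memory but fit comfortably across the rack.

```python
# Rack-level arithmetic for the GB300 NVL72 figures quoted above.
# Assumptions: 1.5 EF FP4 is the rack aggregate; 288GB HBM3e is per GPU;
# footprints count model weights only (no KV cache or activations).
GPUS_PER_RACK = 72
GRACE_CPUS_PER_RACK = 36
RACK_FP4_EXAFLOPS = 1.5
HBM_GB_PER_GPU = 288


def per_gpu_petaflops(rack_exaflops: float, gpus: int) -> float:
    """Split the rack's aggregate throughput evenly across its GPUs (1 EF = 1,000 PF)."""
    return rack_exaflops * 1_000 / gpus


def rack_hbm_tb(gb_per_gpu: float, gpus: int) -> float:
    """Total HBM in the rack, in decimal terabytes."""
    return gb_per_gpu * gpus / 1_000


def weight_footprint_tb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal terabytes."""
    return num_params * bits_per_param / 8 / 1e12


weights = weight_footprint_tb(1e12, 4)  # hypothetical 1T-parameter model stored in FP4
print(f"GPUs per Grace CPU: {GPUS_PER_RACK // GRACE_CPUS_PER_RACK}")
print(f"FP4 per GPU: ~{per_gpu_petaflops(RACK_FP4_EXAFLOPS, GPUS_PER_RACK):.1f} PF")
print(f"HBM per rack: ~{rack_hbm_tb(HBM_GB_PER_GPU, GPUS_PER_RACK):.1f} TB")
print(f"1T params at FP4: {weights:.1f} TB of weights")
print(f"Fits on one GPU: {weights * 1_000 <= HBM_GB_PER_GPU}")
```

Splitting the aggregate evenly is a simplification; sustained per-GPU throughput in practice depends on the workload and interconnect traffic.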

Unlike Google's Gemini Deep Think mode, which focuses on software-side reasoning, NVIDIA's approach accelerates agentic AI workflows in hardware, such as the multi-step problem solving used in autonomous vehicles and drug discovery.

Industry Impact

Dell's new PowerEdge XE9640 servers (4x faster AI training) and Foxconn's AI Factory project, built on 10,000 Blackwell chips, demonstrate immediate adoption. TSMC plans to use the architecture for advanced chip-design simulations (Silicon UK).

Ecosystem Expansion

New DGX Station desktop systems bring Blackwell performance to developers with:

- 784GB unified memory

- 800Gb/s SuperNIC networking

- Seven-way GPU partitioning (Multi-Instance GPU) for multi-user access (NVIDIA Blog)

Future Implications

Jensen Huang confirmed that the Rubin architecture (2026) will push performance to 100 petaflops per GPU, with Blackwell serving as the bridge to physical AI systems that require real-time interaction with their environment (Fanatical Futurist).

Social Pulse: How X and Reddit View NVIDIA's Blackwell Ultra

Dominant Opinions

- Optimistic Adoption (70%):

  - @AI_Guru: 'Blackwell's 10x token speed makes real-time agentic AI finally viable - this is CUDA 2.0'

  - r/MachineLearning post: 'Our 1B param model inference time dropped from 8min to 48sec'

- Cost Concerns (20%):

  - @HardwareSkeptic: '1.5kW per GPU? Most labs can't afford the power bill'

  - r/hardware thread: 'Requires liquid cooling infrastructure - another barrier for startups'

- Ethical Debates (10%):

  - @ResponsibleAI: 'Who controls these 1.5 exaflop systems? Concentration of power worries me'

  - r/Futurology post: 'Military applications of Blackwell's physical AI need oversight'

Overall Sentiment

While most praise the technical achievement, significant discussion focuses on accessibility and governance of ultra-scale AI infrastructure.