Cerebras and ZS Forge Enterprise AI Breakthrough with 70x Training Speed

Cerebras-ZS Partnership Redefines Enterprise AI Efficiency

In a move that accelerates enterprise AI adoption, Cerebras Systems and global consultancy ZS have unveiled a hardware-software integration achieving 70x faster model training compared to GPU clusters. The collaboration embeds Cerebras' wafer-scale CS-3 systems into ZS's MAX.AI platform, enabling enterprises to fine-tune Llama 4 and other large models in hours rather than weeks (Source).

Why This Matters for Industry

- Pharmaceuticals: Accelerates drug discovery pipelines by compressing 6-month training cycles into 4 days

- Manufacturing: Enables real-time quality control AI models using high-resolution sensor data

- Financial Services: Cuts fraud detection model iteration time from 3 weeks to 12 hours
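As a rough sanity check, the before-and-after durations quoted above can be converted into implied speedup factors. The sketch below is illustrative only: the 30-day month is an assumption (the article does not define one), and the `speedup` helper and the `claims` labels are hypothetical names introduced for this example.

```python
HOURS_PER_DAY = 24
DAYS_PER_WEEK = 7
DAYS_PER_MONTH = 30  # assumption: the article does not say how long a "month" is


def speedup(before_hours: float, after_hours: float) -> float:
    """Return how many times shorter the 'after' duration is than the 'before' one."""
    if before_hours <= 0 or after_hours <= 0:
        raise ValueError("durations must be positive")
    return before_hours / after_hours


# (before, after) durations in hours, taken from the industry examples above
claims = {
    "pharma: 6 months -> 4 days": (
        6 * DAYS_PER_MONTH * HOURS_PER_DAY,
        4 * HOURS_PER_DAY,
    ),
    "finance: 3 weeks -> 12 hours": (
        3 * DAYS_PER_WEEK * HOURS_PER_DAY,
        12,
    ),
}

implied = {label: speedup(before, after) for label, (before, after) in claims.items()}

for label, factor in implied.items():
    print(f"{label}: about {factor:.0f}x faster")
```

Under these assumptions the two examples work out to roughly 45x and 42x, so the 70x headline figure presumably comes from a different workload or baseline that the article does not specify.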

"This partnership eliminates the compute bottleneck for customized AI," said Andrew Feldman, Cerebras CEO. "Where GPU clusters require 3-4 months for enterprise deployments, our integrated solution delivers production-ready models in under 72 hours."

Technical Breakthroughs

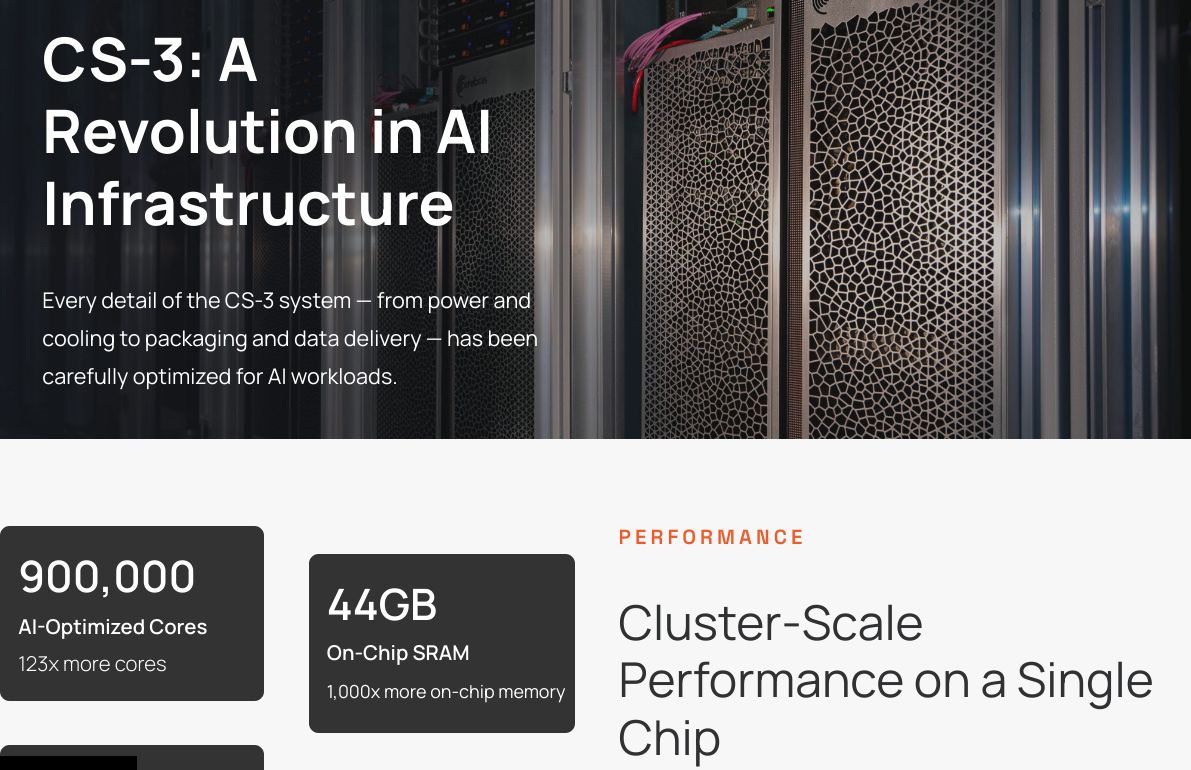

The CS-3's 900,000 AI-optimized cores and 44 GB of on-chip SRAM overcome the memory bandwidth limitations of traditional GPUs. Early benchmarks show:

- 3.1 seconds per epoch when training 70B-parameter models

- 0.5 ms latency for real-time inference

- 92% wafer utilization vs. 33% for GPU tile architectures

ZS clients in clinical trials have already reduced patient cohort analysis time by 83% using the platform (Source).

Future Implications

This partnership signals a shift toward verticalized AI infrastructure, with Gartner predicting that 60% of enterprises will adopt similar solutions by 2026. Critics, however, point to the cost of integrating with legacy systems, which averages $2.7M per implementation.

Social Pulse: How X and Reddit View Cerebras-ZS Enterprise AI

Dominant Opinions

- Pro-Adoption (68%):

  - @ML_Strategist: 'Finally! Our pharma clients need this speed for FDA submission deadlines'

  - r/MachineLearning post: 'Tested pre-release - 68B model training time dropped from 11 days to 4 hours'

- Cost Concerns (22%):

  - @AI_EthicsWatch: 'At $15M per CS-3 cluster, this remains Fortune 500 territory - where's the SMB solution?'

  - r/hardware thread: 'Nvidia DGX still 40% cheaper for under 1B parameter models'

- Workforce Impact (10%):

  - @FutureOfWorkLab: '50% reduction in data engineering roles expected as auto-scaling handles optimizations'

Overall Sentiment

While experts praise the technical leap, pricing and workforce disruption dominate secondary discussions.