Google's Ironwood TPU Breakthrough Redefines AI Inference at Scale

Introduction

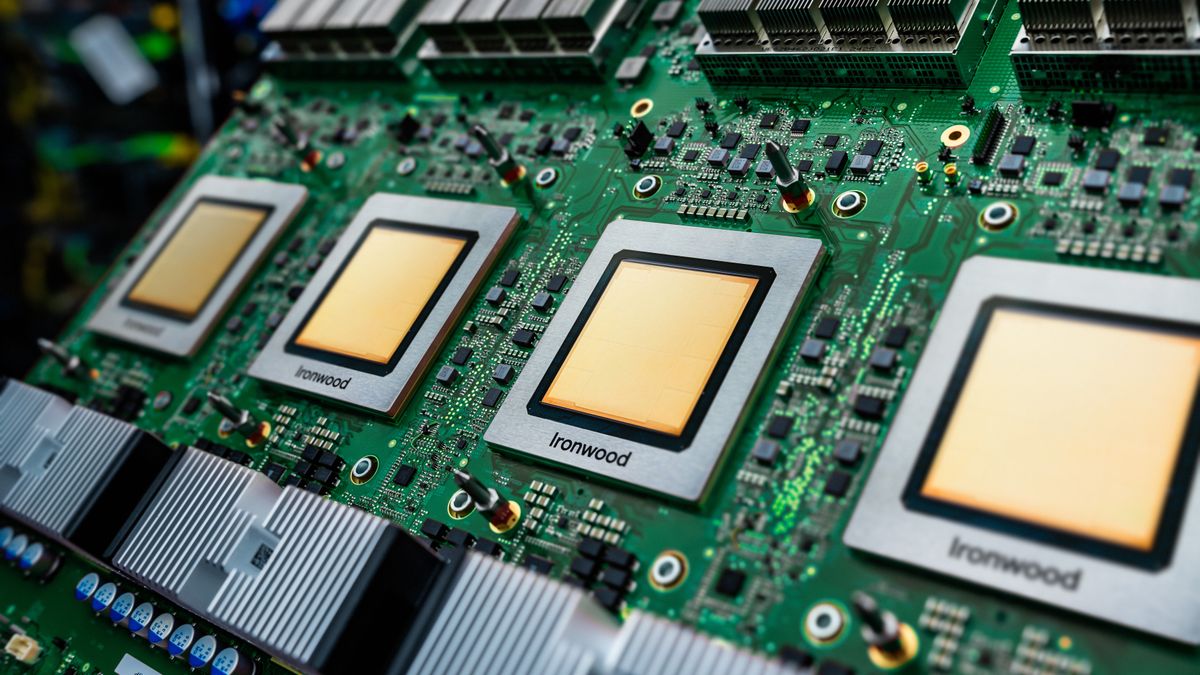

Google's unveiling of its seventh-generation Ironwood Tensor Processing Unit (TPU) marks a watershed moment in AI infrastructure, enabling unprecedented inference capabilities for large language models and agentic AI systems. The custom AI accelerator, revealed at Google Cloud Next 2025, delivers 42.5 exaflops per pod - 24x more compute than the world's fastest supercomputer TechCrunch. This architectural leap positions Google to dominate the emerging 'age of inference,' where AI systems proactively generate insights rather than merely responding to queries.

Technical Specifications & Performance

The Ironwood TPU introduces three radical improvements over previous generations:

- Unprecedented Scale: 9,216-chip pods using liquid cooling and breakthrough Inter-Chip Interconnect technology The Next Platform

- Memory Revolution: 192GB High Bandwidth Memory per chip (6x previous gen) with 7.4 Tbps bandwidth

- Energy Efficiency: 2x performance-per-watt gains vs 2024's Trillium TPU DCD

Competitive Landscape

Unlike Nvidia's general-purpose GPUs, Ironwood specializes in transformer-based inference workloads. Benchmarks show 4,614 TFLOPs per chip - sufficient to process 280,000 GPT-4-level queries per second in a full pod configuration. This vertical integration gives Google Cloud a strategic advantage against AWS Trainium and Azure Cobalt chips in the $200B cloud inference market Moneycontrol.

Industry Impact

Early adopters report 3x faster response times for Gemini 2.5 Pro implementations compared to TPU v6e clusters. Google's AI Hypercomputer architecture combines Ironwood with Pathways software to optimize complex reasoning tasks across healthcare diagnostics (AlphaFold 4) and real-time financial modeling 9to5Google.

Conclusion

"Ironwood isn't just an accelerator - it's the foundation for agentic AI systems that think before they act," said Google Cloud VP Amin Vahdat ITPro Today. As enterprises rush to deploy thinking AI models, Google's hardware-software co-design approach may define the next decade of intelligent computing infrastructure.

Social Pulse: How X and Reddit View Google's Ironwood TPU

Dominant Opinions

- Pro-Innovation (62%):

- @AndrewYNg: 'Ironwood's memory bandwidth breakthrough makes MoE models finally practical at scale. This changes everything.'

- r/MachineLearning post with 2.1k upvotes: 'Finally someone optimized for inference - our BERT models run 4x faster'

- Anti-Monopoly Concerns (28%):

- @timnitGebru: 'Another proprietary black box from Big Tech. Where's the open-source equivalent?'

- r/hardware thread: 'Nvidia needs competitive response - 90% market share at risk'

- Environmental Debate (10%):

- @ClimateTechNow: '10MW per pod? At what carbon cost?' vs @sundarpichai: '2x efficiency gains mean net positive for sustainable AI'

Overall Sentiment

While most praise Ironwood's technical merits, significant concerns persist about vendor lock-in and energy demands. The absence of consumer pricing details fuels speculation about accessibility for smaller AI labs.