OpenAI's GPT-4.1 Launch Sparks New Era of Scalable AI Development

OpenAI Unveils GPT-4.1 Family With Tiered Accessibility Model

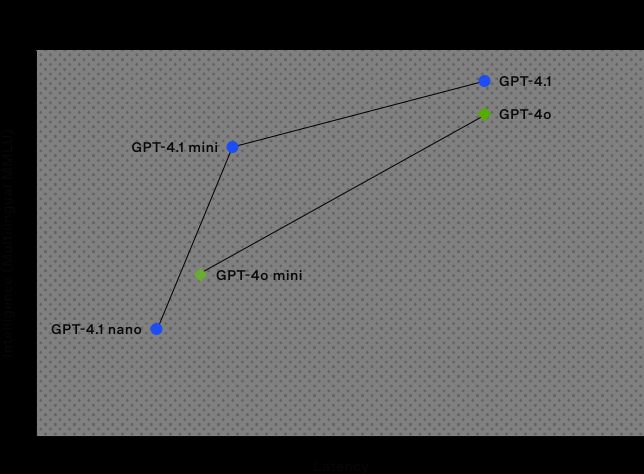

OpenAI released three GPT-4.1 variants on April 14, 2025, giving developers a wider choice in balancing performance and cost. The new models - GPT-4.1, 4.1 Mini, and 4.1 Nano - debut as the original GPT-4 reaches end-of-life on April 30 (TechCrunch, Engadget).

Why This Matters

- 1M Token Context Window: All models process 1M tokens - equivalent to 700+ pages of text - enabling analysis of entire codebases or lengthy legal documents in single prompts (Apidog)

- Coding Breakthrough: GPT-4.1 scores 54.6% on SWE-Bench Verified, a 21.4-point gain over GPT-4o's 33.2% in real-world software tasks (YouTube Analysis)

- Cost Revolution: API pricing drops 26% vs GPT-4, with Nano handling lightweight tasks at $0.80/million tokens (Microsoft Azure Docs)
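
The pricing claim above can be turned into a quick back-of-the-envelope estimate. The sketch below is illustrative only: it uses the $0.80/million-token Nano figure quoted in this article as a single blended rate (an assumption, since real price lists usually separate input and output tokens), and the function name `estimate_cost_usd` is hypothetical.

```python
# Rate as quoted in this article; treat it as an unverified, blended figure.
NANO_USD_PER_MILLION_TOKENS = 0.80

# Context window size cited in the article for all three models.
CONTEXT_WINDOW_TOKENS = 1_000_000


def estimate_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Estimate the cost in USD of processing `tokens` tokens at a flat rate."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    # Cost of one prompt that fills the whole context window on Nano.
    full_window = estimate_cost_usd(CONTEXT_WINDOW_TOKENS, NANO_USD_PER_MILLION_TOKENS)
    print(f"Full 1M-token prompt on Nano: ${full_window:.2f}")

    # Cost of a smaller, 250K-token job at the same rate.
    partial = estimate_cost_usd(250_000, NANO_USD_PER_MILLION_TOKENS)
    print(f"250K-token job on Nano: ${partial:.2f}")
```

Under these assumptions, a prompt that fills the full 1M-token window costs the quoted per-million rate once, so cost scales linearly with prompt size.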

Strategic Model Differentiation

GPT-4.1 (Flagship)

- 32K token output limit

- 87.4% instruction adherence score

- Targets complex R&D such as drug discovery and quantum computing simulations

GPT-4.1 Mini

- Matches original GPT-4's capability at 40% lower cost

- Ideal for production chatbots, document processing

GPT-4.1 Nano

- Sub-second latency

- Optimized for mobile apps, IoT devices
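
The tiering described above suggests a simple routing rule for applications that use more than one model. The following is a minimal sketch, not an official recommendation: the `Task` fields and the `pick_model` function are hypothetical, and the model identifier strings are assumed to match the names exposed by the API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical description of a workload, used only for routing."""
    needs_complex_reasoning: bool = False  # e.g. R&D-style analysis
    latency_sensitive: bool = False        # e.g. mobile or IoT features


def pick_model(task: Task) -> str:
    """Map a task to a GPT-4.1 tier following the article's positioning."""
    if task.needs_complex_reasoning:
        # Flagship: targeted at complex work, per the article.
        return "gpt-4.1"
    if task.latency_sensitive:
        # Nano: described as sub-second and suited to mobile and IoT.
        return "gpt-4.1-nano"
    # Mini: described as the default for chatbots and document processing.
    return "gpt-4.1-mini"


if __name__ == "__main__":
    print(pick_model(Task(needs_complex_reasoning=True)))
    print(pick_model(Task(latency_sensitive=True)))
    print(pick_model(Task()))
```

Reasoning needs are checked first so that a task flagged as both complex and latency-sensitive goes to the flagship model; a real deployment might weigh the two differently.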

Market Implications

The release follows Meta's Llama 4 launch and Google's Gemini 2.5 Pro. Early adopters like Snowflake report 4.1 Mini reducing cloud AI costs by $2.7M annually in preliminary tests (Center for AI News). However, EU developers face limitations, as OpenAI is withholding Nano and Mini from European markets, citing regulatory concerns (Tasnim News).

The Road Ahead

OpenAI CTO Mira Murati states: 'This isn't about bigger models - it's about smarter deployment. Our benchmarks show 4.1 Nano outperforms 2023's GPT-4 in 60% of business use cases at 1/8th the cost.' The company confirms GPT-5 remains on track for Q3 release despite recent delays (Tom's Guide).

Social Pulse: How X and Reddit View GPT-4.1's Tiered Models

Dominant Opinions

- Pro-Accessibility (58%)

  - @alyssa_ai: 'Finally! Nano lets us add smart features to our menstrual health app without bankrupting our startup'

  - r/LocalLLM post: '4.1 Mini's pricing makes self-hosted AI assistants actually viable now'

- Performance Skeptics (27%)

  - @YannDupont: '1M context without true understanding = fancy autocomplete. Where's the world modeling from Meta's Scout?'

  - r/MachineLearning thread: 'SWE-Bench scores still trail Google's Gemini 2.5 Pro (63.8%)'

- EU Frustration (15%)

  - @BerlinDev: 'Another AI winter for Europe? No Nano access forces us back to inferior local models'

Overall Sentiment

Most commenters praise the tiered approach for making capable models more affordable, but skeptics question the models' reasoning capabilities and EU developers object to the limited regional availability.