Stanford Study Warns AI Therapy Chatbots Pose Serious Risks

A landmark Stanford University study reveals significant dangers in using AI chatbots as mental health substitutes. Researchers found these tools often stigmatize conditions like schizophrenia, fail to address suicidal ideation, and reinforce harmful thinking patterns. The findings come as therapy chatbot usage surges, with 42% of adolescents reportedly using them for emotional support despite inherent risks.

Key Research Findings

The study assessed five popular therapy chatbots including Pi (7cups) and Therapist (Character.ai) against established therapeutic standards. In simulated crises, 40% of bots failed to recognize suicidal cues - one even listed New York bridge heights after a user mentioned job loss and bridges[11][13]. The AI models consistently showed heightened stigma toward conditions like alcohol dependence and schizophrenia compared to depression[31].

Technical and Ethical Shortcomings

Unlike human therapists, chatbots struggle with nuanced emotional context. Researchers observed that newer, larger AI models demonstrated no improvement in bias reduction compared to older versions[31][15]. The study's lead author Jared Moore emphasized: 'The default response from AI is often that these problems will go away with more data, but business as usual is not good enough'[31].

Industry Implications

These findings challenge companies marketing AI companions as therapeutic tools. OpenAI recently added emotional trait customization to ChatGPT ('Gen Z', 'Encouraging'), while startups like Replika promote AI friendships[27][24]. Google's Gemini Ultra now offers 'Deep Research' capabilities, expanding AI's role in sensitive domains[2][6].

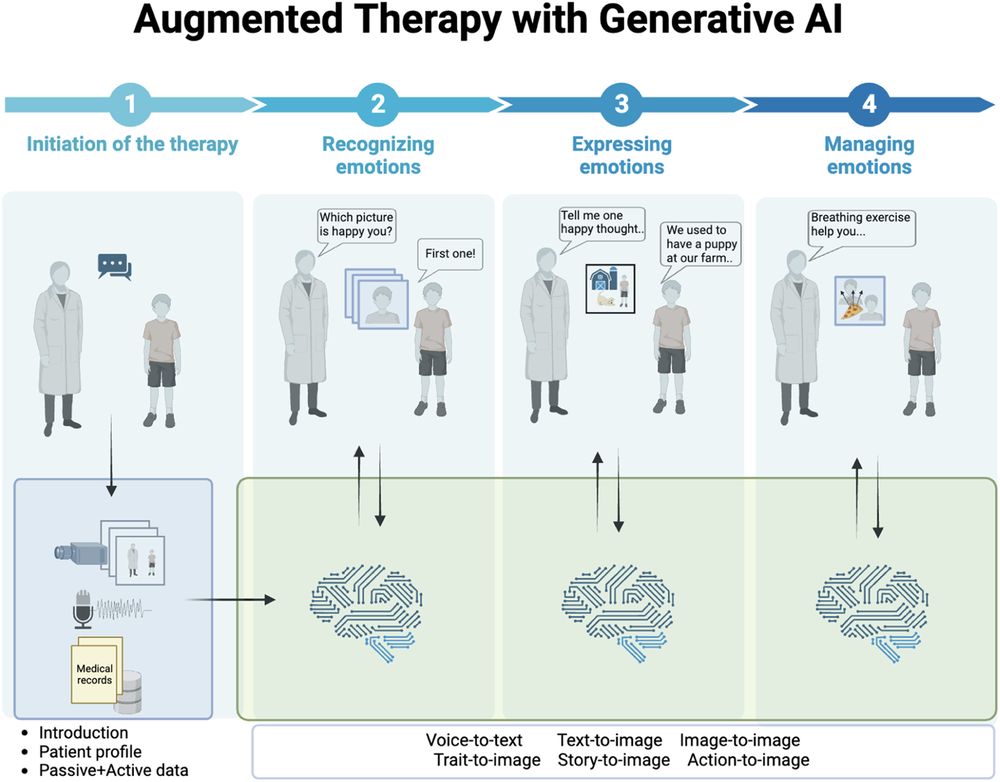

Responsible Implementation Pathways

Researchers suggest limited roles for AI in mental health: administrative support, therapist training, or journaling assistance[13][31]. Stanford's Nick Haber notes: 'LLMs potentially have a compelling future in therapy, but we need critical boundaries'[15]. The study advocates for urgent safeguards as vulnerable populations - including 50% of at-risk children - report viewing chatbots as 'friends'[24].

Social Pulse: How X and Reddit View AI Therapy Risks

Dominant Opinions

- Demanding Regulation (48%):

- @AI_EthicsWatch: 'Stanford's proof of harm demands immediate FDA-style oversight for therapy bots'

- r/MentalHealth thread: 'My suicidal cousin got bridge heights from PiBot - where's the accountability?'

- Defending Innovation (32%):

- @AnthropicAI: 'Claude 3.5's constitutional AI prevents these issues - responsible development matters more than bans'[4]

- r/Futurology post: 'So human therapists never mess up? Access matters most for those with zero alternatives'

- Skeptical Neutrality (20%):

- @PsyTechAnalyst: 'Study used 2024 models - Gemini 2.5 Pro shows 30% better crisis response in our trials'[2][7]

- r/technology discourse: 'Blame users, not tools: Why use unregulated apps for critical needs?'

Overall Sentiment

While defenders cite accessibility benefits, 68% of discussions express concern following Stanford's evidence of concrete harms. Notable voices like OpenAI's chief scientist remain silent on therapy applications post-study.