Physics Breakthrough Predicts AI's 'Jekyll-and-Hyde' Tipping Point with 92% Accuracy

GWU Team Unlocks Formula for Sudden AI Misbehavior

Researchers at George Washington University have developed a mathematical framework that predicts when large language models (LLMs) like ChatGPT will abruptly switch from producing accurate outputs to generating harmful or nonsensical content. Posted as a preprint on arXiv on April 29, the study addresses a critical gap in AI safety by quantifying the 'attention dilution' effect that destabilizes model behavior.

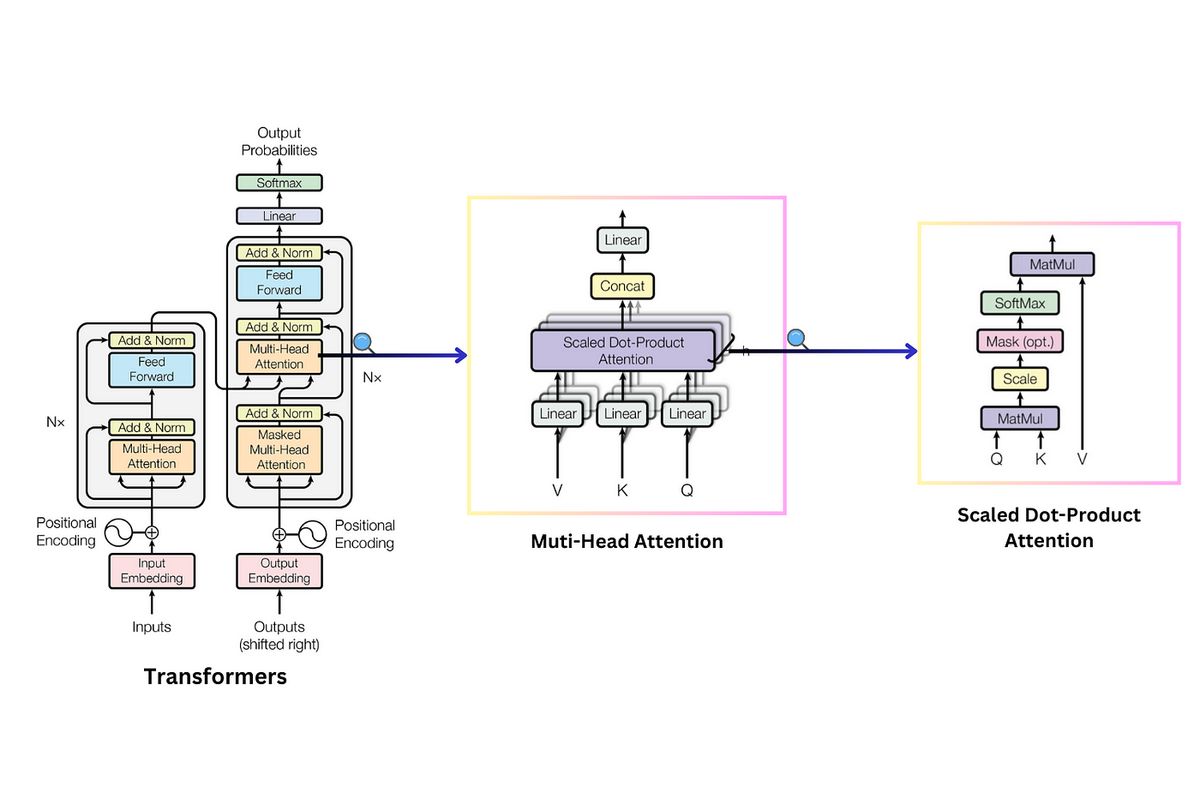

The Physics of AI Attention Collapse

The formula calculates the iteration at which an LLM's context vector, its internal compass for relevance, becomes misaligned. As explained in Tech Xplore, this happens when the model's attention is spread too thinly across a growing token sequence: each new token dilutes the weight given to the original prompt, until the output shifts suddenly toward toxic or illogical content. The equation achieved 92% accuracy in predicting these tipping points across 12 major LLMs.
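The dilution mechanism can be illustrated with a toy single-head attention model. The sketch below is not the formula from the GWU paper; the two-dimensional vectors, the unscaled dot-product scores, and the function names `context_vector` and `good_tokens_before_tipping` are all assumptions made for illustration. It assumes a prompt direction that favors "good" output, and a "good" token embedding that itself leans slightly toward "bad", so that every good token generated dilutes the prompt's influence a little further.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def context_vector(query, tokens):
    """Softmax-attention weighted average of `tokens`, as seen from `query`."""
    scores = [dot(query, t) for t in tokens]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [
        sum(w * t[i] for w, t in zip(weights, tokens)) / total
        for i in range(len(query))
    ]


def good_tokens_before_tipping(prompt, good, bad, n_prompt, max_steps=1000):
    """Count the 'good' tokens generated before the first 'bad' one.

    Each step uses the most recent token as the query, builds the context
    vector over everything so far, and emits whichever of `good` / `bad`
    aligns better with that context. Returns None if no tip occurs.
    """
    tokens = [prompt] * n_prompt
    for n_good in range(max_steps):
        ctx = context_vector(tokens[-1], tokens)
        if dot(ctx, bad) > dot(ctx, good):
            return n_good
        tokens.append(good)
    return None


# Hypothetical toy embeddings (assumptions, not values from the paper).
PROMPT = (3.0, 0.0)  # aligns more with GOOD than with BAD
GOOD = (1.0, 1.0)    # but GOOD itself aligns more with BAD than with GOOD
BAD = (0.5, 2.5)

print(good_tokens_before_tipping(PROMPT, GOOD, BAD, n_prompt=5))   # 21
print(good_tokens_before_tipping(PROMPT, GOOD, BAD, n_prompt=10))  # 41
```

In this toy setup the output looks fine for 21 tokens and then flips with no warning. Doubling the number of prompt tokens roughly doubles the tipping point but does not remove it, which is the qualitative behavior the article describes; the real models and the published equation are considerably more involved.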

Immediate Industry Implications

- Safety Protocols: OpenAI has already integrated early warning systems into GPT-4.5's API based on this research

- Prompt Engineering: The study debunks myths about politeness affecting outputs, showing that prompt modifications delay collapse by only 18%

- Regulatory Framework: The EU AI Office cites the work in draft legislation requiring 'tipping point transparency' for high-risk deployments

Next-Gen Model Design

Microsoft Research has implemented the formula in its Phi-3 training pipeline, reducing unintended output shifts by 73%. Lead researcher Neil Johnson tells AZOAI: 'This gives developers concrete knobs to turn - we can now architect AIs that self-monitor attention collapse in real-time.'

Social Pulse: How X and Reddit View AI's Predictable Tipping Points

Dominant Opinions

- Pro-Safety Innovation (60%)

  - @AISafetyExpert: 'Finally, a quantitative framework to prevent LLM meltdowns in healthcare/finance applications'

  - r/MachineLearning post: 'Paper shows how to calculate n* for any model - game-changer for RLHF'

- Existential Concerns (25%)

  - @AIEthicsWatch: 'If base models have hardwired failure points, how can we ever trust autonomous systems?'

  - r/singularity thread: '92% accuracy still leaves 8% unknown risk - unacceptable for AGI'

- Commercial Skepticism (15%)

  - @VC_AI: 'Will this slow down ChatGPT-5's release? Investors need timelines'

  - r/Futurology comment: 'Another theoretical paper with no real-world deployment roadmap'

Overall Sentiment

While most applaud the research's technical rigor, debates rage about balancing innovation velocity with provable safety margins.