NVIDIA's DiffusionRenderer Unlocks Photorealistic Scene Editing From Single Videos

NVIDIA has unveiled DiffusionRenderer, a neural rendering framework that lets users edit scenes captured in a single video and re-render them photorealistically. Because it does not require explicit 3D assets, creators can manipulate lighting, materials, and geometry directly in existing footage, which could change workflows in film production, gaming, and virtual reality.

How It Works

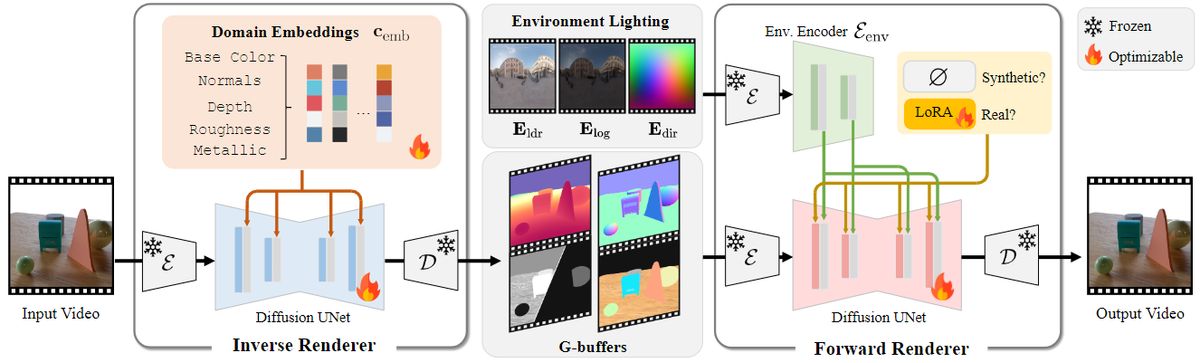

Unlike traditional physically based rendering (PBR), which requires precise 3D geometry and lighting data, DiffusionRenderer uses video diffusion models for both halves of the problem. An inverse renderer estimates intrinsic scene properties, such as geometry and material buffers (G-buffers), from the input video. A forward renderer then uses these buffers to generate the scene under new conditions, such as different lighting. According to NVIDIA researchers, this approach "bypasses limitations of traditional graphics pipelines" by learning from synthetic and auto-labeled real-world data (Tech Xplore).
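To make the inverse/forward split concrete, here is a minimal sketch of what a forward renderer does with a G-buffer in classical graphics, using simple diffuse (Lambertian) shading. This is a swapped-in textbook technique, not NVIDIA's method: DiffusionRenderer replaces a hand-written shading function like this one with a learned video diffusion model, which also handles effects such as shadows that this toy ignores. The names `GBufferPixel`, `shade_lambertian`, and `forward_render` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GBufferPixel:
    """Hypothetical per-pixel properties an inverse renderer might estimate."""
    normal: Vec3             # unit surface normal
    albedo: Vec3             # base color per RGB channel, in [0, 1]
    roughness: float = 0.5   # carried along but unused by this toy shader
    metallic: float = 0.0    # carried along but unused by this toy shader


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def shade_lambertian(px: GBufferPixel, light_dir: Vec3, light_rgb: Vec3) -> Vec3:
    """Diffuse-only shading: albedo * light color * max(0, n . l).

    Ignores roughness, metallic, shadows, and interreflections.
    """
    l = normalize(light_dir)
    n_dot_l = max(0.0, sum(n * c for n, c in zip(px.normal, l)))
    return (
        px.albedo[0] * light_rgb[0] * n_dot_l,
        px.albedo[1] * light_rgb[1] * n_dot_l,
        px.albedo[2] * light_rgb[2] * n_dot_l,
    )


def forward_render(gbuffer: List[GBufferPixel], light_dir: Vec3, light_rgb: Vec3) -> List[Vec3]:
    """Re-render every pixel of a flattened G-buffer under one directional light."""
    return [shade_lambertian(px, light_dir, light_rgb) for px in gbuffer]


# Relighting: the same estimated buffers rendered under two different lights.
frame = [GBufferPixel(normal=(0.0, 0.0, 1.0), albedo=(0.8, 0.5, 0.2))]
print(forward_render(frame, (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)))  # → [(0.8, 0.5, 0.2)]
print(forward_render(frame, (1.0, 0.0, 0.0), (1.0, 1.0, 1.0)))  # → [(0.0, 0.0, 0.0)]
```

Keeping the buffers fixed and changing only the light is the essence of relighting. A learned forward renderer performs the same job but can also produce cast shadows and reflections, which purely per-pixel shading cannot.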

Performance Milestones

In NVIDIA's quantitative evaluations, DiffusionRenderer outperforms prior methods:

- Achieves a PSNR of 28.3 dB on synthetic objects and 26.0 dB on synthetic scenes

- Improves albedo estimation PSNR to 22.4 dB on the InteriorVerse benchmark

- Reduces RMSE for roughness and metallic estimates by 41% compared to image-based models

- Generates realistic lighting effects such as shadows without explicit 3D geometry (NVIDIA Developer Blog)
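The figures above rely on standard image-quality metrics. As a hedged reference, here is how PSNR and RMSE are conventionally computed, assuming pixel values scaled to [0, 1]. The article does not say which scaling or averaging NVIDIA used, so these helpers (`mse`, `rmse`, `psnr`, and `relative_reduction`, all hypothetical names) illustrate the definitions rather than reproduce the reported numbers.

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error over flattened pixel values."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def rmse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Root mean squared error: same units as the pixel values."""
    return math.sqrt(mse(pred, target))


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE).

    Higher is better; identical inputs give infinity.
    """
    err = mse(pred, target)
    if err == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


def relative_reduction(baseline: float, new: float) -> float:
    """Fractional improvement, e.g. 0.41 for a 41% lower error."""
    return (baseline - new) / baseline
```

For example, an MSE of 0.01 gives 10 · log10(1 / 0.01) = 20 dB, and inverting the formula, 28.3 dB corresponds to an MSE of roughly 0.0015 on [0, 1]-scaled images. Likewise, a 41% RMSE reduction means the new error is 0.59 times the baseline's.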

Practical Applications

The technology enables:

- Dynamic relighting: Modify lighting conditions in existing footage

- Material swapping: Change an object's material properties, such as albedo, roughness, or metallic, while preserving its geometry

- Object insertion: Add CGI elements with realistic shadows and reflections

- Scene reconstruction: Recover editable scene properties from everyday videos

NVIDIA VP Sanja Fidler notes that this "brings generative AI to the core of graphics workflows," accelerating VFX production and reducing costs (CVPR 2025 paper).
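Object insertion can be framed as an edit to the estimated buffers rather than to final pixels. The sketch below shows one plausible preparation step: a per-pixel depth test that merges an inserted object's buffers into the scene's. It assumes both were produced from the same camera at the same resolution. The `Pixel` type and `composite_gbuffers` function are hypothetical and not taken from NVIDIA's code. In a pipeline like the one described under How It Works, the merged buffers would then pass through the forward renderer, which is where shadows and reflections on surrounding surfaces would come from.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pixel:
    """Hypothetical G-buffer sample; smaller depth means closer to the camera."""
    depth: float
    albedo: Vec3
    normal: Vec3


def composite_gbuffers(scene: List[Pixel], obj: List[Optional[Pixel]]) -> List[Pixel]:
    """Z-buffer merge: use the object's sample wherever it covers the pixel
    and is nearer than the scene surface; otherwise keep the scene's sample.

    `obj` holds None at pixels the inserted object does not cover.
    """
    if len(scene) != len(obj):
        raise ValueError("scene and object buffers must have the same resolution")
    merged = []
    for s, o in zip(scene, obj):
        merged.append(o if o is not None and o.depth < s.depth else s)
    return merged


# Pixel 0: wall the object does not cover; pixel 1: wall behind the object;
# pixel 2: a pillar standing in front of the object.
facing = (0.0, 0.0, 1.0)
scene = [Pixel(5.0, (0.5, 0.5, 0.5), facing),
         Pixel(5.0, (0.5, 0.5, 0.5), facing),
         Pixel(1.0, (0.3, 0.3, 0.3), facing)]
red_object = [None, Pixel(2.0, (1.0, 0.0, 0.0), facing), Pixel(2.0, (1.0, 0.0, 0.0), facing)]
merged = composite_gbuffers(scene, red_object)
print([p.albedo for p in merged])
# → [(0.5, 0.5, 0.5), (1.0, 0.0, 0.0), (0.3, 0.3, 0.3)]
```

The depth test keeps the pillar in front of the inserted object, so occlusion is resolved before any lighting is computed.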

Industry Impact

DiffusionRenderer arrives amid intense competition in AI video tools and, in NVIDIA's evaluations, outperforms alternatives such as DiLightNet and RGB↔X in rendering quality. While OpenAI's Sora offers text-to-video generation, NVIDIA's solution provides granular control over scene properties, which could make it valuable infrastructure for creative professionals. The technology could also find use in AR/VR development and in robotic vision systems that require accurate environmental understanding.

Social Pulse: How X and Reddit View NVIDIA's DiffusionRenderer

Dominant Opinions

- Creative Enthusiasm (68%): @VFXArtist: "DiffusionRenderer's material editing finally makes AI VFX tools usable for actual production work – the dragon demo is unreal!" r/videos thread: "Inserting CGI into real footage with perfect lighting? This eliminates weeks of compositing work for indie creators."

- Deepfake Concerns (22%): @AIEthicsWatch: "Photorealistic video manipulation without technical barriers demands urgent watermarking standards before election cycles." r/technology post: "NVIDIA's demo shows a weaponized deepfake future – inserting objects into real footage breaks evidentiary reliability."

- Technical Skepticism (10%): @CGI_Engineer: "Impressed but wary: Training data limitations could cause artifacts in diverse real-world lighting scenarios outside lab conditions."

Overall Sentiment

While creators celebrate unprecedented editing capabilities, significant concerns persist about authentication and misuse, highlighting the tension between innovation and media integrity.