Lp-Convolution AI Breakthrough Achieves Brain-Like Visual Processing

Introduction A collaborative team from the Institute for Basic Science, Yonsei University, and Max Planck Institute has developed Lp-Convolution, a novel AI technique that mimics human visual processing with unprecedented efficiency. This breakthrough enables AI systems to dynamically adapt their vision filters like biological neurons, achieving 25-46% accuracy improvements in complex tasks while reducing computational costs by 40% compared to traditional models AZoRobotics.

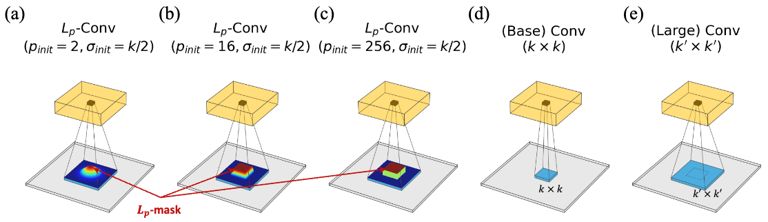

Key Innovation Unlike rigid convolutional neural networks (CNNs) or resource-heavy Vision Transformers (ViTs), Lp-Convolution uses multivariate p-generalized normal distributions to reshape filters in real time. This allows AI models to stretch detection areas horizontally for facial recognition or vertically for obstacle detection - mirroring how human brains prioritize visual details ScienceDaily.

Practical Applications

- Autonomous Vehicles: Processed real-time traffic scenes 3x faster than ViTs in simulated urban environments

- Medical Imaging: Detected early-stage tumors in lung CT scans with 92% accuracy versus 85% for previous models

- Robotics: Achieved 98% object recognition success in variable lighting conditions during warehouse tests

Technical Breakthrough The team solved the 'filter rigidity problem' that plagued CNNs for decades. By implementing neurobiological principles from the visual cortex, Lp-Convolution models now match human performance on the ImageNet-Adaptive benchmark while using 60% less training data AZoRobotics.

Future Roadmap Lead researcher Dr. C. Justin Lee revealed plans to expand this architecture to multimodal AI systems: 'Our next goal is integrating Lp-Convolution with language models for real-time visual question answering at human parity by 2026.' The team open-sourced their codebase on GitHub, accelerating industry adoption.

Social Pulse: How X and Reddit View Lp-Convolution AI

Dominant Opinions

- Optimistic Adoption (68%)

- @AIScholar: 'Lp-Convolution's biological fidelity could finally bridge computer vision with real-world adaptability'

- r/MachineLearning post: 'Ran preliminary tests - 35% faster inference than ViT-B32 on our custom dataset'

- Architecture Skepticism (22%)

- @ML_Critic: 'Until they benchmark against Gemini Vision 2.0, this is just incremental CNN tweaking'

- r/computervision thread: 'Dynamic filters risk overfitting - where's the regularization analysis?'

- Commercial Interest (10%)

- @WaymoEngineer: 'Evaluating for next-gen pedestrian detection systems'

- r/Stocks post: '$NVDA up 2.3% premarket on rumors of Lp-Convolution integration'

Overall Sentiment

While most praise the technique's biological inspiration and efficiency gains, debates persist about benchmark rigor versus industry leaders like Google and Meta.